Configuration

The first time you start Stream, a config.ini file is created in the directory you have specified. Please note that any time you change the contents of config.ini, you will need to restart the Docker container. You can edit the file directly or use our Stream Online Config GUI.

# List of TZ names on https://en.wikipedia.org/wiki/List_of_tz_database_time_zones

timezone = UTC

config_change_restart = no

[cameras]

# Full list of regions: https://guides.platerecognizer.com/docs/tech-references/country-codes

# regions = fr, gb

# Image file name, you can use any format codes

# from https://docs.python.org/3/library/datetime.html#strftime-and-strptime-format-codes

image_format = $(camera)_screenshots/%y-%m-%d/%H-%M-%S.%f.jpg

# For example, you may add:

# mmc = true

[[camera-1]]

active = yes

url = rtsp://192.168.0.108:8080/video/h264

# Save to CSV file. The corresponding frame is stored as an image in the same directory.

# See more formats on https://guides.platerecognizer.com/docs/stream/configuration#output-formats

csv_file = $(camera)_%y-%m-%d.csv

Helpful Tips

Please read the tips below to get the most out of your Stream deployment.

| Use Case | Tips |
|---|---|
| All Use Cases | 1) Set the Region Code to tune the engine for your specific countries or states. 2) Set mmc = true if your license includes Vehicle Make Model Color. 3) Set detection_mode = vehicle if you want to detect vehicles without a visible plate. 4) Forward results to ParkPow if you need a dashboard with proactive alerts, etc. |
| Parking | This includes gated communities, toll, weigh bridge and other slow-traffic use cases. 1) Set max_prediction_delay to 1-2 seconds to read a plate more quickly. 2) Set the Sample Rate to achieve an effective FPS of 4-5, since vehicle speeds are fairly slow. 3) Apply Detection Zones so Stream ignores certain areas of the camera view. 4) Forward results to Gate Opener if you need to open a gate. |
| ALPR Inside Moving Car | This includes police surveillance, dash cams, and drones. 1) The merge_buffer parameter was previously used to compensate for vibrations but is now deprecated; modern versions of Stream handle this automatically. 2) Keep the Sample Rate (sample =) at 1-3 so that Stream can process most or all of the frames, especially if vehicles are moving fast. |
| Highway, Street Monitoring | 1) Apply Detection Zones so Stream ignores certain areas of the camera view. 2) Adjust the Sample Rate based on the speed of the vehicles. |

Hierarchical Configuration

The config.ini file defines parameters that apply either to all cameras or to a single camera.

When parameters are defined at the [cameras] level, they apply to all cameras. When they are defined at the [[camera-id]] level, they apply to a single camera.

[cameras]

# Parameters for all cameras

regions = fr

image_format = $(camera)_screenshots/%y-%m-%d/%H-%M-%S.%f.jpg

csv_file = $(camera)_%y-%m-%d.csv

mmc = true

[[camera-1]]

# Parameters set only for camera-1

active = yes

url = rtsp://192.168.0.108:8080/video/camera-1

mmc = false # mmc = false is only applied for camera-1

[[camera-2]]

# Parameters set only for camera-2

active = yes

url = rtsp://192.168.0.108:8080/video/camera-2

# Uses mmc = true defined in [cameras]
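To make the override rule concrete, here is a minimal Python sketch that resolves the effective parameters for one camera. It assumes the file has already been parsed into nested dictionaries (for example by a ConfigObj-style parser); the CONFIG dictionary and the effective_params helper are hypothetical illustrations, not part of Stream.

```python
from typing import Any

# Hypothetical: the example config above, parsed into nested dicts.
# Camera sub-sections ([[camera-1]], [[camera-2]]) appear as dict values under "cameras".
CONFIG: dict[str, Any] = {
    "cameras": {
        "regions": "fr",
        "csv_file": "$(camera)_%y-%m-%d.csv",
        "mmc": "true",
        "camera-1": {"active": "yes", "url": "rtsp://192.168.0.108:8080/video/camera-1", "mmc": "false"},
        "camera-2": {"active": "yes", "url": "rtsp://192.168.0.108:8080/video/camera-2"},
    }
}


def effective_params(config: dict[str, Any], camera_id: str) -> dict[str, Any]:
    """Merge the global [cameras] values with one camera's own overrides."""
    cameras = config["cameras"]
    # Global parameters are the non-section (non-dict) entries of [cameras].
    merged = {k: v for k, v in cameras.items() if not isinstance(v, dict)}
    # Camera-level values take precedence over global ones.
    merged.update(cameras[camera_id])
    return merged


if __name__ == "__main__":
    for cam in ("camera-1", "camera-2"):
        print(cam, effective_params(CONFIG, cam)["mmc"])
```

Here camera-1 resolves mmc to false because its own section overrides the global value, while camera-2 inherits mmc = true and regions = fr from [cameras].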

Some parameters are disabled by default; they are commented out with the character # at the beginning of the line.

To activate such a parameter, remove the #, save the file and restart the Docker container.

Parameters

All parameters are optional except url.

active

- Stream processes all the cameras defined in the config file that have active set to yes. See the example above.

- Stream will automatically reconnect to the camera stream when there is a disconnection. There is a delay between attempts.

Once a camera connection is lost, because the camera turns off or because of a network failure, Stream starts trying to reconnect with a small delay of 2 seconds between attempts, gradually increasing it up to max_reconnection_delay (600 seconds by default). For example, if a camera is turned back on after being off for 5 hours, it will take at most 10 minutes for Stream to start processing the video again.
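The reconnection schedule can be pictured as a capped exponential backoff (the max_reconnection_delay section describes the growth as exponential). The Python sketch below assumes the delay starts at 2 seconds and doubles after each failed attempt; the doubling factor and the reconnection_delays helper are assumptions for illustration, since only the starting delay and the cap are documented.

```python
def reconnection_delays(attempts: int, initial: float = 2.0,
                        max_reconnection_delay: float = 600.0) -> list[float]:
    """Wait (in seconds) before each of the next `attempts` reconnection tries.

    Assumption: the delay doubles after every failure until it hits the cap.
    """
    delays = []
    delay = initial
    for _ in range(attempts):
        delays.append(min(delay, max_reconnection_delay))
        delay *= 2  # grow the delay after every failed attempt
    return delays


if __name__ == "__main__":
    print(reconnection_delays(10))
```

Once the cap is reached, every further attempt waits max_reconnection_delay seconds, which is why a camera that comes back online is picked up within at most 10 minutes with the default of 600 seconds.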

config_change_restart

This option allows Stream to restart automatically when the configuration is changed through the Graphical User Interface (GUI).

When set to yes, the system checks every 20 minutes for any differences between the web configuration and the local configuration. If a change is detected, Stream is automatically restarted to apply the new settings.

detection_mode

Detection of vehicles without plates can be enabled with detection_mode = vehicle. In this mode, the output uses a different format: the license plate object is optional, and the props element, which holds the object properties (make, model or license plate text), is also optional. The default value is detection_mode = plate.

max_prediction_delay

Set the time delay (in seconds) for the vehicle plate prediction. A higher value lets Stream capture more frames and can result in higher prediction accuracy.

- The default value is 6 seconds. So by default, Stream waits at most 6 seconds before it sends the plate output via Webhooks or saves it in the CSV file.

- For Parking Access use cases, where the camera can see the vehicle approaching the parking gate, you can decrease this parameter to, say, 3 seconds to speed up the time it takes to open the gate.

- In situations where the camera cannot see the license plate very well (for example, due to an obstruction or a lower-resolution camera), increasing max_prediction_delay gives Stream more time to find the best frame for the best ALPR results.

memory_decay

Set the time after which Stream can detect the same vehicle again on a specific camera. This can be useful in parking access situations where the same vehicle may turn around and approach the same Stream camera. Once memory_decay has elapsed, Stream will be able to recognize and decode that same vehicle again.

- The minimum value is 0.1 seconds. However, setting it that low is not recommended: if the camera still sees that vehicle (say, 0.2 seconds later), Stream will count the same vehicle again in the ALPR results. There is no maximum value.

- If this parameter is omitted, Stream will use the default value, which is 300 seconds (5 minutes).

- This parameter has no effect if a vehicle is seen by multiple cameras.
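A minimal Python sketch of this per-camera memory follows. It assumes vehicles are identified by their plate text and that memory is measured from the last time the vehicle was reported; Stream's real matching logic is not documented here, and the VehicleMemory class is hypothetical.

```python
class VehicleMemory:
    """Per-camera memory of recently reported vehicles (illustrative only).

    Assumption: a vehicle is keyed by (camera_id, plate text).
    """

    def __init__(self, memory_decay: float = 300.0) -> None:
        self.memory_decay = memory_decay  # seconds; 300 s is the documented default
        # (camera_id, plate) -> time in seconds when that vehicle was last reported
        self._last_reported: dict[tuple[str, str], float] = {}

    def should_report(self, camera_id: str, plate: str, now: float) -> bool:
        """Return True if this sighting should be reported as a new vehicle."""
        key = (camera_id, plate)
        last = self._last_reported.get(key)
        if last is not None and now - last < self.memory_decay:
            return False  # same camera, same plate, still inside memory_decay
        self._last_reported[key] = now
        return True


if __name__ == "__main__":
    memory = VehicleMemory(memory_decay=300)
    print(memory.should_report("camera-1", "abc123", now=0))    # True: first sighting
    print(memory.should_report("camera-1", "abc123", now=120))  # False: within 300 s
    print(memory.should_report("camera-2", "abc123", now=120))  # True: other camera
    print(memory.should_report("camera-1", "abc123", now=301))  # True: memory decayed
```

Keying the memory by camera mirrors the note that memory_decay has no effect when a vehicle is seen by multiple cameras.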

mmc

-

Get Vehicle Make, Model and Color (MMC) identification from a global dataset of 9000+ make/models.

-

Vehicle Orientation refers to Front or Rear of a vehicle.

-

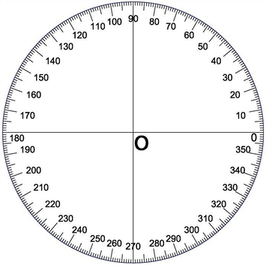

Direction of Travel is an angle in degrees between 0 and 360 of a Unit Circle.

Examples:

- 180° = Car going left.

- 0° = Car going right.

- 90° = Car going upward.

- 270° = Car going downward.

-

The output will be in both CSV file and also via Webhooks.

-

Please note that the Stream Free Trial does not include Vehicle MMC. To get Vehicle MMC on Stream, subscribe.

-

If you have a subscription for Vehicle MMC, then add this line:

mmc = true
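The angle convention for Direction of Travel can be reproduced from two positions of a vehicle in the image. This Python sketch is only an illustration of the unit-circle convention listed above, not how Stream computes the value; direction_of_travel is a hypothetical helper, and it assumes pixel coordinates with y growing downward, as is usual for images.

```python
import math


def direction_of_travel(x0: float, y0: float, x1: float, y1: float) -> float:
    """Angle in degrees, in [0, 360), of a move from (x0, y0) to (x1, y1) in image pixels.

    Image y coordinates grow downward, so dy is negated to follow the unit-circle
    convention (0 = right, 90 = up, 180 = left, 270 = down).
    """
    dx = x1 - x0
    dy = -(y1 - y0)
    return math.degrees(math.atan2(dy, dx)) % 360.0


if __name__ == "__main__":
    print(direction_of_travel(100, 50, 40, 50))   # car moving left
    print(direction_of_travel(100, 50, 100, 90))  # car moving down
```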

regions

Include one or more regions based on the camera's location. Best practice is to list the top 3-4 states/countries of the vehicle plates that the camera will be observing. This tunes the engine to correctly distinguish zero from the letter O, one from the letter I, etc. Note that even if a foreign vehicle arrives, the engine will still decode that foreign vehicle correctly. See list of states and countries.

sample

The sample setting defines how frequently frames from the camera or video are analyzed by Stream. This helps reduce hardware usage by intentionally skipping certain frames.

- By default, sample = 2, so Stream processes every other frame.

- Set sample = 3 if you want to process every third frame.

- Set sample rate based on the speed of the vehicles. Refer to our FAQ on eFPS for details.
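If eFPS is taken to mean the camera frame rate divided by sample (which follows from "every other frame" at sample = 2), choosing sample becomes simple arithmetic. The helpers below are hypothetical, and the 30 FPS camera is an assumed example; check the eFPS FAQ for the authoritative definition.

```python
import math


def effective_fps(camera_fps: float, sample: int) -> float:
    """Frames per second Stream analyzes when it processes every `sample`-th frame."""
    return camera_fps / sample


def sample_for_target(camera_fps: float, target_efps: float) -> int:
    """Largest sample value that keeps the effective FPS at or above target_efps."""
    return max(1, math.floor(camera_fps / target_efps))


if __name__ == "__main__":
    print(effective_fps(30, 2))      # default sample = 2 on an assumed 30 FPS camera
    print(sample_for_target(30, 5))  # aim for about 5 eFPS, e.g. for parking
```

For the slow-traffic Parking tip (an eFPS of 4-5), a 30 FPS camera would use sample = 6 under this definition, while fast highway traffic calls for a smaller value.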

timezone

- You can set the timezone for the timestamp in the CSV and also Webhooks output.

- If you omit this field, then the default timezone output will be UTC.

- See list of supported time zones.

- Plate Recognizer automatically adjusts for time changes (e.g. daylight savings, standard time) for each timezone. Examples:

a) For Silicon Valley, use timezone = America/Los_Angeles.

b) For Budapest, use timezone = Europe/Budapest. You can also use timezone = Europe/Berlin.

The timestamp_local field in the output is the time the frame with the vehicle was received by Stream. Stream uses the operating system clock and applies the timezone from config.ini.

The timestamp field is the same time in UTC timezone.

The timestamp_camera field is the time the frame with the vehicle was captured by the camera, in the UTC timezone. The camera records it and sends it to Stream in an RTCP Sender Report. Some cameras do not send this timestamp at all, or send it with a delay, in which case the value will be set to null.
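The relation between timestamp (UTC) and timestamp_local can be checked with Python's standard zoneinfo module, which uses the same IANA time zone names as config.ini. This is a sketch for verifying outputs, not Stream's code; the detection times are hypothetical, and zoneinfo needs the IANA tz database on the host (or the tzdata package).

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo  # Python 3.9+; requires the IANA tz database


def to_local(ts_utc: datetime, tz_name: str) -> datetime:
    """Convert an aware UTC datetime to the named IANA timezone (DST handled by zoneinfo)."""
    return ts_utc.astimezone(ZoneInfo(tz_name))


if __name__ == "__main__":
    summer = datetime(2024, 7, 1, 12, 0, tzinfo=timezone.utc)  # hypothetical detection time
    winter = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)
    for name in ("America/Los_Angeles", "Europe/Budapest"):
        print(name, to_local(summer, name).isoformat(), to_local(winter, name).isoformat())
```

The same UTC instant maps to 05:00 in Los Angeles in July but 04:00 in January, which is the daylight-saving adjustment described above.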

url

- To run Stream on an RTSP camera feed, set url to the address of the RTSP stream. For example:

url = rtsp://192.168.0.108:8080/video/h264

or

url = rtsp://admin:12345@192.168.0.108:8080/video/h264

# where admin is the username and 12345 is the password

- If processing a stream using the UDP protocol, such as VLC, include this option in the Stream run command:

-e OPENCV_FFMPEG_CAPTURE_OPTIONS="rtsp_transport;udp"

- For additional help with RTSP, please go to https://www.getscw.com/decoding/rtsp

- You can also process video files.

Advanced Parameters

Advanced Parameters offer granular control and customization options beyond the basic functionality, enhancing the versatility of Stream.

detection_rule

detection_rule = strict (default): License plates detected outside a vehicle will be discarded.

detection_rule = normal: License plates detected outside a vehicle are kept.

dwell_time

Stream can estimate the time a vehicle spends in the area and then send this information out by a separate webhook. To enable this feature, set dwell_time = true. The time is calculated as the difference in seconds between the first and last moments the vehicle was visible.

New in version 1.44.0.

- Dwelling time is part of the MMC Package. To use this feature, you need an MMC subscription.

- The Dwelling feature is not supported in ParkPow webhooks due to internal API restrictions. Ensure that dwell_time is not a parameter sent to a ParkPow webhook.

Dwell time works for vehicles without license plates as well. To differentiate between vehicles, the timestamp JSON field is set to the same value as in the corresponding recognition webhook. See webhook data format.

max_dwell_delay

When a vehicle disappears from view, Stream waits for max_dwell_delay (15 seconds by default) before sending a webhook with dwelling time information. This is done to ignore temporary vehicle obstructions. You can set this parameter to a lower value to receive dwelling time faster at the cost of accuracy.

max_dwell_delay should be set to a higher value than max_prediction_delay, ideally at least twice as large.
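The interplay of dwell_time and max_dwell_delay can be sketched as a small tracker: dwell time is last seen minus first seen, and it is only reported once the vehicle has been absent for longer than max_dwell_delay, so a brief obstruction just extends the same track. This is a hypothetical illustration; vehicle_id stands in for Stream's internal vehicle tracking, which is not documented here.

```python
from dataclasses import dataclass


@dataclass
class Track:
    first_seen: float  # seconds
    last_seen: float


class DwellTracker:
    """Illustrative dwell-time tracker; vehicle_id is a hypothetical tracking key."""

    def __init__(self, max_dwell_delay: float = 15.0) -> None:
        self.max_dwell_delay = max_dwell_delay
        self.tracks: dict[str, Track] = {}

    def observe(self, vehicle_id: str, t: float) -> None:
        """Record that the vehicle is visible at time t."""
        track = self.tracks.get(vehicle_id)
        if track is None:
            self.tracks[vehicle_id] = Track(first_seen=t, last_seen=t)
        else:
            track.last_seen = t  # short gaps (obstructions) just extend the same track

    def flush(self, now: float) -> dict[str, float]:
        """Return dwell times of vehicles unseen for more than max_dwell_delay, and forget them."""
        finished = {
            vid: tr.last_seen - tr.first_seen
            for vid, tr in self.tracks.items()
            if now - tr.last_seen > self.max_dwell_delay
        }
        for vid in finished:
            del self.tracks[vid]
        return finished


if __name__ == "__main__":
    tracker = DwellTracker(max_dwell_delay=15)
    tracker.observe("v1", 0.0)
    tracker.observe("v1", 40.0)
    print(tracker.flush(50.0))   # {} : hidden for 10 s only, may be an obstruction
    tracker.observe("v1", 52.0)  # visible again, same track continues
    print(tracker.flush(68.0))   # {'v1': 52.0}
```

A lower max_dwell_delay makes flush report sooner, at the cost of splitting one visit into two if the vehicle is hidden longer than the delay.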

trajectory

Stream can report the trajectory of a vehicle along with dwell info. To enable that, set trajectory = true.

New in version 1.54.0.

Trajectory needs dwell_time to be enabled.

speed

When speed = true is set, Stream can estimate a vehicle's speed in km/h (requires MMC subscription).

New in version 1.54.0.

This feature is in beta and is being actively worked on. At the moment, speed estimates may be inaccurate. Calibration is fully automatic and requires the majority of the vehicles in the scene to be moving in the same direction. The camera also needs to point at least slightly downward toward the road, rather than parallel to it.

Enabling this feature will substantially increase CPU usage, especially at the start while parameters are being calibrated. This may negatively affect Health Score.

max_reconnection_delay

When Stream cannot reach the specified camera url, it will repeatedly try to reconnect after a short delay. With every failed attempt, the delay increases exponentially until it reaches max_reconnection_delay (600 seconds by default). You may set this parameter to a lower value if you want Stream to try reconnecting more often.

New in version 1.43.0.

You can set max_reconnection_delay in the hierarchical configuration either as a global parameter or per camera.

Add the parameter under the [cameras] level to apply it globally, or under a camera section, say [[my-camera-1]], to set that camera's individual maximum reconnection delay.

merge_buffer

Deprecated in version 1.44.0.

This feature improves Stream's accuracy when there's vibration in the camera (e.g. camera inside a moving car) or when vehicles are crowded together. This algorithm controls how many time-steps Stream uses to match a new object to an existing one.

This parameter has to be tuned carefully for your specific case. If Stream is missing cars, refer to the table below to adjust the merge_buffer value accordingly; otherwise, leave it at the default.

| Value | Description |
|---|---|
| -1 | Default value; Stream's engine automatically selects the appropriate value. |
| 1 | Set this value when the camera is mounted in a moving vehicle or subject to vibrations, or when the road is very crowded, with many overlapping vehicles. |

The lower the Merge Buffer value, the more sensitively the engine matches new vehicles/plates. This leads to higher CPU utilization. A lower value may also increase the chance of false positives.


plates_per_vehicle

This parameter controls the maximum number of plates that can be detected per vehicle (default is 1). If you expect vehicles with multiple plates, set it to the maximum possible number of plates. Setting plates_per_vehicle = -1 removes the limit on the number of plates per vehicle, but could result in false positive detections.

New in version 1.50.0.

region_config

When enabled, Stream only accepts results that exactly match the templates of the specified region. For example, if the license plates of a region have 3 letters and 3 digits, the value abc1234 will be discarded. To turn this on, add region_config = strict.

text_formats

A list of regular expressions used to guide the engine's predictions.

For example, if you know the output is generally 3 digits and 2 letters, you would use:

text_formats = [0-9][0-9][0-9][a-z][a-z]

If you have multiple patterns, separate them with commas:

text_formats = first_pattern, second_pattern, third_pattern, ...

The engine may not follow the format when its predictions differ too much from it. If you want results to match the regular expression exactly, also set (see region_config above):

region_config = strict

New in version 1.53.0.
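The exact-match behaviour that region_config = strict adds on top of text_formats can be approximated with Python's re.fullmatch. This sketch only checks a finished plate string against the patterns; how the engine uses text_formats to guide its predictions internally is not reproduced, and matches_text_formats is a hypothetical helper.

```python
import re


def matches_text_formats(plate: str, text_formats: str) -> bool:
    """True if the plate fully matches at least one comma-separated pattern.

    Limitation of this sketch: splitting on commas breaks patterns that contain
    a comma themselves (e.g. [0-9]{2,3}).
    """
    patterns = [p.strip() for p in text_formats.split(",") if p.strip()]
    return any(re.fullmatch(p, plate) for p in patterns)


if __name__ == "__main__":
    fmt = "[0-9][0-9][0-9][a-z][a-z]"
    print(matches_text_formats("123ab", fmt))    # True
    print(matches_text_formats("abc1234", fmt))  # False
```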

report_static

By default, Stream ignores static (non-moving) vehicles. If report_static = yes is set, Stream will treat them like any other vehicle.

New in version 1.52.0.

Output Formats

Stream can export detection results in various formats. By default, files are saved in the Stream installation directory (where your config.ini is located).

csv_file

Save detection results to CSV format by adding this to your config.ini:

csv_file = $(camera)_%y-%m-%d.csv

Need to customize the filename or output location? Check our FAQ - Custom folders or FAQ - Custom filenames.
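Output file names combine the $(camera) placeholder with format codes from Python's strftime. The sketch below shows how such a template could expand for a given camera and time; the order of substitution and the expand_output_name helper are assumptions for illustration, and the detection time is hypothetical.

```python
from datetime import datetime


def expand_output_name(template: str, camera_id: str, when: datetime) -> str:
    """Apply strftime codes first, then substitute $(camera).

    Doing strftime first means a '%' inside a camera ID is never read as a format code.
    """
    return when.strftime(template).replace("$(camera)", camera_id)


if __name__ == "__main__":
    t = datetime(2024, 7, 1, 12, 30, 5, 123456)  # hypothetical detection time
    print(expand_output_name("$(camera)_%y-%m-%d.csv", "camera-1", t))
    # camera-1_24-07-01.csv
    print(expand_output_name("$(camera)_screenshots/%y-%m-%d/%H-%M-%S.%f.jpg", "camera-1", t))
    # camera-1_screenshots/24-07-01/12-30-05.123456.jpg
```

Because %y-%m-%d changes once per day, the CSV template above starts a new file for each camera every day.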

jsonlines_file

Save detection results to JSON Lines format by adding this to your config.ini:

jsonlines_file = $(camera)_%y-%m-%d.jsonl

Need to customize the filename or output location? Check our FAQ - Custom folders or FAQ - Custom filenames.

image_format

Save detection snapshots as images by adding this to your config.ini:

image_format = $(camera)_screenshots/%y-%m-%d_%H-%M-%S.jpg

Images are automatically saved when using csv_file or jsonlines_file. To disable image saving, set image_format to empty:

image_format =

If you have a webhook with images, image_format should NOT be empty.

Need to customize the filename or output location? Check our FAQ - Custom folders or FAQ - Custom filenames.

Want to automatically remove old images? See our FAQ.

video_format

Save video clips of detected vehicles by adding this to your config.ini:

video_format = $(camera)_videos/%y-%m-%d/%H-%M-%S.%f.mp4

If you don't need to save videos, you can leave it empty:

video_format =

Need to customize the filename or output location? Check our FAQ - Custom folders or FAQ - Custom filenames.

Currently only .mp4 output format is supported. We may add more formats in the future.

After a video file has been sent out to a webhook, it gets removed from disk. Otherwise, it is kept forever.

Enabling the feature to save video clips not only substantially increases CPU usage but also consumes significant storage space. Prior to enabling this feature, ensure you have spare processing capacity and sufficient available storage.

New in version 1.45.0.

video_before

When a video clip is saved, Stream records a few seconds before the vehicle was detected. This time is controlled by the video_before parameter. The default value is 5 seconds.

video_after

When a video clip is saved, Stream records a few seconds after the vehicle was detected. This time is controlled by the video_after parameter. The default value is 5 seconds.

Total video duration could differ slightly from values specified in video_before and video_after due to encoding and frame rate differences.
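Recording footage from before a detection requires keeping recent frames in memory. A common way to do that is a fixed-size ring buffer, sketched below in Python with collections.deque; this is a generic technique shown under the assumption that video_before and video_after map to whole frame counts, not a description of Stream's recorder, and ClipBuffer is hypothetical.

```python
from collections import deque
from typing import Any


class ClipBuffer:
    """Ring buffer holding the last video_before seconds of frames (illustrative only)."""

    def __init__(self, fps: float, video_before: float = 5.0, video_after: float = 5.0) -> None:
        # Durations are converted to whole frame counts, so in this sketch clip
        # lengths are only approximately video_before + video_after seconds.
        self.pre_roll: deque = deque(maxlen=max(1, round(fps * video_before)))
        self.frames_after = round(fps * video_after)

    def push(self, frame: Any) -> None:
        """Add a frame; the oldest one is dropped automatically once the buffer is full."""
        self.pre_roll.append(frame)

    def start_clip(self) -> list[Any]:
        """Frames from before the detection; a caller would then record frames_after more."""
        return list(self.pre_roll)
```

Memory use grows with video_before times the frame rate, which is one reason long pre-roll settings are costly.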

video_fps

There are two options for video clips frame rate:

video_fps = native (default) records frames at the rate Stream is reading them from source;

video_fps = sample records frames at reduced rate specified by sample parameter.

video_overlay

You can add an information overlay to video clips to gain insight into Stream's operation and simplify troubleshooting or config tuning. This parameter can be set to one or several (separated by commas) of the available options:

- all: turns on all available overlays;

- boxes: draws bounding boxes for vehicles and plates, each vehicle in its own unique color;

- trace: draws a vehicle trajectory;

- vehicle_type: adds the vehicle type on top of the vehicle bounding box;

- dscores: adds detection scores on top of the bounding boxes;

- confirmed: adds a rolling text box with recognition event information in the same color as the vehicle's bounding box; note that it is displayed when Stream reports this information, which is usually after the vehicle has left the frame (see max_prediction_delay), so adjust video_after appropriately;

- mmc: adds vehicle Make, Model, Color, Orientation and Direction information (requires MMC subscription) to the confirmed overlay;

- dwell_time: adds a rolling text box with dwell time information (similar to confirmed; see max_dwell_delay and video_after);

- health_score: adds the Health Score number to the frame;

- timestamp: adds the time in the specified timezone to the frame and to the confirmed overlay, identifying the moment of recognition/dwell events;

- position_sec: adds an internal video second counter, useful for video files (similar to timestamp).

Stream can only add overlays to sample frames, so setting video_fps = sample is recommended when using video_overlay.

New in version 1.52.0.

video_codec

There are two options for the video clip codec:

video_codec = mp4v (default) encodes video clips with MPEG-4 part 2 codec;

video_codec = h264 encodes video clips with H.264 codec.

H.264 encoding requires significantly more CPU and memory resources, which may not yield real-time processing and could lead to abrupt crashes. If crashes occur with video_codec = h264, increase your Docker or WSL resource limits, as these crashes are likely due to RAM limitations in Docker or WSL.

New in version 1.52.0.

Webhook Parameters

This part of the config was recently changed but is backward compatible. See Compatibility with the old webhooks format.

Stream can automatically forward the results of the license plate detection and the associated vehicle image to a URL. You can use Webhooks and also save images and CSV files in a folder. By default, no Webhooks are sent.

How Webhooks Are Sent

- If the target cannot be reached (HTTP errors or timeout), the request is retried 3 times.

- If it is still failing, the data is saved to disk or memory, depending on the caching parameter.

- When a webhook fails, all new webhook data will be cached.

- Every 2 minutes, Stream attempts to send the cached webhooks. If a send is successful, Stream also sends any remaining cached data.

- When webhooks are saved to disk, the oldest data is removed when free disk space is low.
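The retry-then-cache flow above can be sketched in Python. This is an illustration under stated assumptions, not Stream's code: "retried 3 times" is read as one initial attempt plus three retries, each periodic flush tries the oldest cached item once, `send` is a hypothetical callable returning True on success, and only the in-memory cache used with caching = no is modeled.

```python
from collections import deque
from typing import Callable


class WebhookSender:
    """Sketch of the documented retry-then-cache flow (not Stream's code).

    `send` is a hypothetical callable returning True on success and False on an
    HTTP error or timeout. The on-disk cache used with caching = yes is not modeled.
    """

    def __init__(self, send: Callable[[dict], bool], retries: int = 3, cache_size: int = 100) -> None:
        self.send = send
        self.retries = retries
        self.cache: deque = deque(maxlen=cache_size)  # oldest entry dropped when full

    def _send_with_retries(self, payload: dict) -> bool:
        # One attempt plus `retries` retries; any() stops at the first success.
        return any(self.send(payload) for _ in range(1 + self.retries))

    def deliver(self, payload: dict) -> None:
        # While earlier webhooks are still cached, new data is cached as well.
        if self.cache or not self._send_with_retries(payload):
            self.cache.append(payload)

    def flush(self) -> None:
        """Run periodically (every 2 minutes in Stream); resends cached data oldest first."""
        while self.cache:
            if not self.send(self.cache[0]):
                return  # target still unreachable; keep the rest for the next flush
            self.cache.popleft()
```

Sending cached items oldest first keeps the events in order once the target recovers.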

Format and Fields

- Description of the webhook data format.

- To read the webhook message, you can use our webhook receiver (available in multiple languages).

webhook_targets

- To send data to webhooks, you must list them in the webhook_targets camera property of the config.ini.

- The recognition data and vehicle image are encoded in multipart/form-data.

- To ensure that your webhook_targets endpoint is correct, please test it out at www.webhook.site.

You can send to multiple targets by simply listing all the targets. Each target has its own set of parameters: url, image, image_type (deprecated), request_timeout, caching, header, video, camera_id.

webhook_targets = my-webhook-1, my-webhook-2

Previously webhook_targets were directly specified as URLs and shared parameters. This way is still supported but we recommend using the new format. See Compatibility with the old webhooks format.

Webhook Descriptors

Each webhook you are planning to use should be described in the [webhooks] section of the config.ini file. This section also uses a Hierarchical Configuration structure:

# Webhook targets and their parameters

[webhooks]

caching = yes

[[my-webhook-1]]

url = http://my-webhook-1.site

image = vehicle, plate

request_timeout = 30

camera_id = my-camera-1

[[my-webhook-2]]

url = http://my-webhook-2.site

image = no

video = file

request_timeout = 20

caching = no

url (webhook)

Specifies the URL to send the webhook data to. For example:

url = http://my-webhook-1.site

camera_id (webhook)

Optional camera ID field, if you want a different Camera ID in the webhook data. For example:

camera_id = my-camera-1

image

- This field can be set to either:

image = yes

image = no

- When image = no is set, only the decoded plate information is sent; the image data is not sent to the target URL. This lets your system receive the plate information faster, which is especially useful for Parking Access situations where you need to open an access gate based on the decoded license plate.

- When image = yes is set, the image data is sent along with the plate information to the target URL. This is equivalent to image = vehicle.

- When that field is no, the license plate bounding box is calculated based on the original image. Otherwise, it is calculated based on the image sent in the webhook. Please see Considerations for more details.

Starting from version 1.53.0, it is possible, and preferred, to specify the image type(s) directly in the image field, as in:

image = original

image = vehicle

image = plate

image = original, plate

If you don't want image data to be sent, you can specify image = no as usual.

image_type

- This field can be set to either:

image_type = original

image_type = vehicle

image_type = plate

image_type = padded_plate

- When set to original, the webhook will receive the full-size original image from the camera.

- When set to vehicle, the webhook will receive the image contained within the bounding box of each vehicle detected.

- When set to plate, the webhook will receive the image of the plate in the request.

- When set to padded_plate, the webhook will receive the plate image extended to include part of the vehicle. This is useful for reducing bandwidth requirements while still providing context around the license plate. Added in version 1.58.0.

- If necessary, it is possible to combine image_type options. Any combination is valid:

image_type = original, plate

image_type = original, vehicle, plate

Deprecated in version 1.53.0. Please use the image parameter instead.

Please be aware that, according to the selected image or image_type options, multiple images may be included in a single webhook request. This could lead to increased bandwidth usage.

image Considerations

The webhook bounding boxes are computed based on the selected image types, so they might not match the CSV bounding boxes. The largest image sent becomes the reference for any smaller image types included in the same request. The image sizes are as follows (from largest to smallest):

- original

- vehicle

- padded_plate

This is the logic that Stream uses to compute image sizes.

- If image has more than one value, e.g. image = original, plate, the largest is used to calculate the bounding boxes, as outlined above. In this case, original will be the base image.

- Setting image = vehicle, plate will use vehicle as the base image for bounding box calculations. The smallest possible image to consider is padded_plate.

- On the other hand, if image = no is set, the bounding boxes are always computed based on the original image. image = yes is equivalent to image = vehicle, so the bounding boxes are computed based on the vehicle image.

The CSV bounding boxes are always calculated based on the original image. This setting only affects webhook events.

For webhooks, only the vehicle and plate bounding boxes are sent. Please refer to the Vehicle information payload format for details. For now, all you need to know is that every bounding box is defined by two points: top-left (xmin, ymin) and bottom-right (xmax, ymax).

Even if only a single image is sent, the rest of the bounding boxes will be calculated based on the largest image sent.

Let's work through an example to illustrate this. Suppose you have the following configuration:

| Image type | Resolution | Absolute position from original |
|---|---|---|
| Original | 3840x2160 | (0, 0) to (3840, 2160) |
| Vehicle | 1920x1080 | (960, 540) to (2880, 1620) |
| Plate | 400x100 | (1700, 1000) to (2100, 1100) |

If you set image = original, the bounding boxes will be calculated as follows:

- Vehicle bounding box: (960, 540) to (2880, 1620)

- Plate bounding box: (1700, 1000) to (2100, 1100)

On the other hand, if you set image = vehicle, the bounding boxes will be calculated as:

- Vehicle bounding box: (0, 0) to (1920, 1080)

- Plate bounding box: (740, 460) to (1140, 560)
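The arithmetic in this example amounts to subtracting the top-left corner of the base image from every coordinate. The Python sketch below reproduces the numbers above; it assumes the cropped images are not resized (as in the example, where each crop's resolution equals its extent in the original), and relative_to is a hypothetical helper.

```python
Box = tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax)


def relative_to(box: Box, base: Box) -> Box:
    """Re-express a box given in original-image pixels relative to the base image's top-left corner."""
    ox, oy = base[0], base[1]
    return (box[0] - ox, box[1] - oy, box[2] - ox, box[3] - oy)


if __name__ == "__main__":
    original = (0, 0, 3840, 2160)
    vehicle = (960, 540, 2880, 1620)
    plate = (1700, 1000, 2100, 1100)
    print(relative_to(plate, original))   # (1700, 1000, 2100, 1100): image = original
    print(relative_to(vehicle, vehicle))  # (0, 0, 1920, 1080): image = vehicle
    print(relative_to(plate, vehicle))    # (740, 460, 1140, 560): image = vehicle
```

To map a webhook box back to CSV (original-image) coordinates, add the base image's offset instead of subtracting it.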

image_quality

JPEG quality for webhook images, from 1 to 100 (default 80). The lower the quality the smaller the image size.

New in version 1.58.0.

video

- This field can be set to either:

video = file

video = url

video = no

- When video = file is set (default), the video clip with the timestamp, license plate (if recognized), and other vehicle information will be sent to the target URL.

- When video = url is set, Stream will send only the video file information; the video file itself will not be sent to the target URL. This option is reserved for future use.

- When video = no is set, no video information will be sent to the webhook.

Similar to dwell_time, video information is sent separately from the recognition data, once the video file becomes available. See Get ALPR Results for webhook JSON format details.

caching

When caching = yes (default value), all failed webhook requests are saved to disk and retried later, following the webhook sending flow. When disk space runs low, there is a risk of losing undelivered webhooks, because the oldest unsent entries will be pruned.

To turn this off, use caching = no. Stream will then keep an in-memory cache of up to 100 failed requests. If the target URL is down for an extended period of time and caching is disabled, you may lose data: once the in-memory cache is full, the oldest entry is discarded.

If your goal is to record every visit, for instance in ParkPow or any other parking management system, we strongly discourage disabling caching. However, in some use cases, such as automating gate opening, disabling caching is recommended, because a failed request that is retransmitted later can cause an unintended gate activation.

New in version 1.29.0.

request_timeout

A webhook request will timeout after request_timeout seconds. The default value is 30 seconds.

header

The webhook request can have a custom header. To set it, use header = Name: example. By default no custom header is used.

Starting with version 1.58.0, the header parameter now accepts multiple values. Multiple headers should be separated by a comma. If a header value contains a comma, it must be escaped with a backslash.

For example,

header = "Authorization: Token ************, Accept-Encoding: gzip\, deflate"

Forwarding ALPR to ParkPow (example only)

- To forward ALPR info from Stream over to ParkPow (our ALPR Dashboard and Parking Management solution), please refer to this example below:

[cameras]

# Global cameras parameters here

[[camera-1]]

# Camera-specific parameters here

webhook_targets = ParkPow-webhook

# Webhook targets and their parameters

[webhooks]

[[ParkPow-webhook]]

url = https://app.parkpow.com/api/v1/webhook-receiver/

image = vehicle

header = Authorization: Token 5e858******3c

camera_id = my-camera-1

- Please note that you must add the header parameter to send info to ParkPow via webhooks.

Sending Additional Information (GPS)

You can set arbitrary user data in the webhook (for example, a GPS coordinate). It can be updated at any time via a POST request.

- Start Stream with:

docker run ... -p 8001:8001 ...

- Send a POST request with the user data:

curl -d "id=camera-1&data=example" http://localhost:8001/user-data/

- In this example, we set the data for camera-1. It should match the ID used in config.ini. The user data is set to example.

- When a webhook is sent, it will have a new field, user_data, with the value set in the previous command. JSONL output will also include this field.

The new data will be included only when there is a new detection. For example,

- At 12:00, you set the user data to some GPS coordinates.

- At 12:05, there is a new detection and it will include the new coordinates.
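If a script, rather than curl, updates the GPS data, the same form-encoded POST can be built with Python's standard urllib. This is a sketch that mirrors the curl command above; the helper names are hypothetical, and it assumes Stream was started with port 8001 published.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_user_data_request(camera_id: str, data: str,
                            base_url: str = "http://localhost:8001") -> Request:
    """Form-encoded POST equivalent to: curl -d "id=<camera_id>&data=<data>" <base_url>/user-data/"""
    body = urlencode({"id": camera_id, "data": data}).encode()
    # urllib adds Content-Type: application/x-www-form-urlencoded for a bytes body,
    # which is also what curl -d sends.
    return Request(f"{base_url}/user-data/", data=body, method="POST")


def post_user_data(camera_id: str, data: str) -> int:
    """Send the request to a running Stream container and return the HTTP status code."""
    with urlopen(build_user_data_request(camera_id, data), timeout=10) as resp:
        return resp.status
```

For example, post_user_data("camera-1", "45.5017,-73.5673") would attach a hypothetical GPS position to the next detection from camera-1; urlencode percent-encodes the comma, so the value arrives intact.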

Compatibility with the old webhooks format

Previous versions of Stream config file used a different format for webhooks, in which multiple targets shared the same parameters:

[cameras]

# Global cameras parameters here

[[camera-1]]

# Camera-specific parameters here

# Webhooks parameters

webhook_target = http://my-webhook-1.site

webhook_targets = http://my-webhook-2.site, http://my-webhook-3.site

webhook_image = yes

webhook_image_type = vehicle

webhook_caching = yes

webhook_request_timeout = 30

webhook_header = Name: example

While the old format is still supported, we encourage you to migrate to the new format, which allows you to specify different parameters for each webhook.

All you need to do is add a [webhooks] section to your config file and move the webhook parameters to the new section. webhook_targets will be specified by webhook names rather than URLs:

[cameras]

# Global cameras parameters here

[[camera-1]]

# Camera-specific parameters here

webhook_targets = my-webhook-1, my-webhook-2, my-webhook-3

# Webhook targets and their parameters

[webhooks]

[[my-webhook-1]]

url = http://my-webhook-1.site

image = vehicle

caching = yes

request_timeout = 30

header = Name: example

[[my-webhook-2]]

url = http://my-webhook-2.site

image = no

[[my-webhook-3]]

url = http://my-webhook-3.site

Unspecified parameters will use the default values, mentioned in the sections above.

Other Parameters

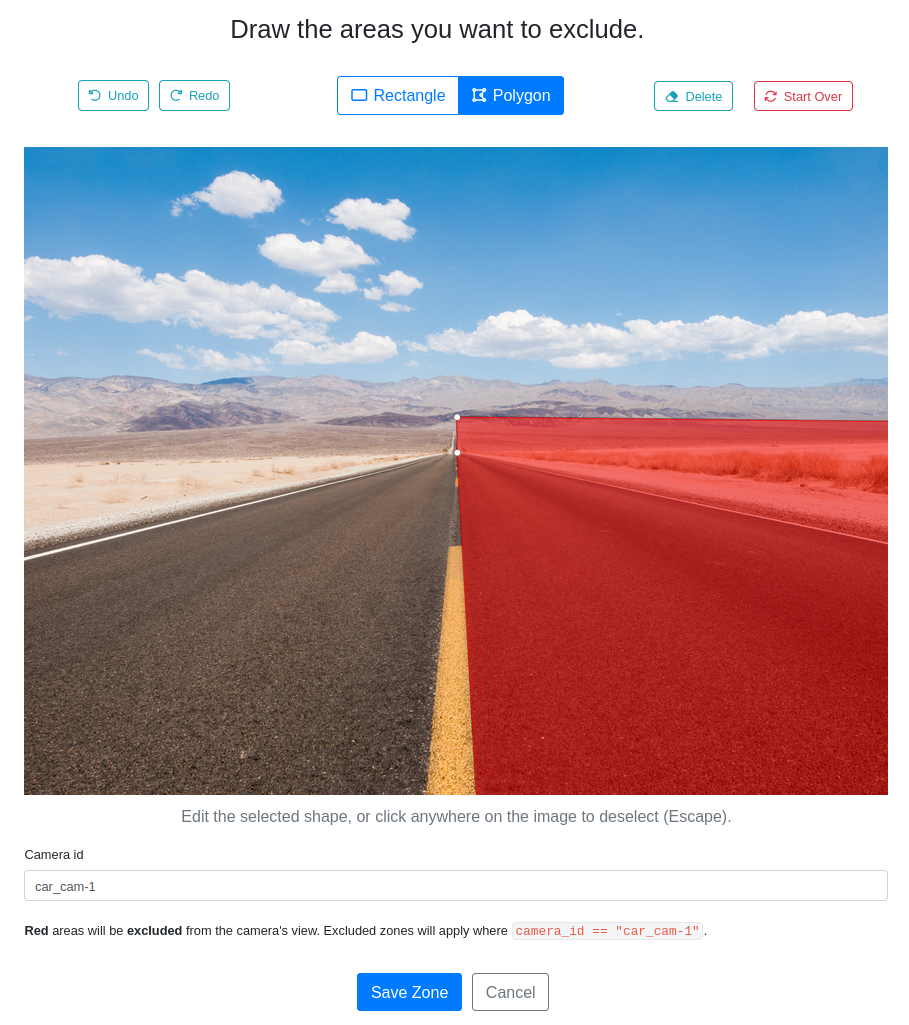

Detection Zone

Detection Zones exclude overlay texts, street signs or other objects. For more info, read our blog on Detection Zones.

- To start, go to Detection Zone in your Plate Recognizer Account Page and upload a capture from the camera set up in Stream.

- Add the camera_id parameter. It is the name between [[ ]] in your config.ini configuration file; make sure they are identical. The example below shows a configuration whose camera_id is 'camera_01'. After these steps, click "Add Zone".

[cameras]

# Global cameras parameters here

[[camera_01]]

active = yes

url = rtsp://<rtsp_user>:<rtsp_password>@<rtsp_url>:<rtsp_port>/<rtsp_camera_path>

# More camera parameters below

# ...

- The system will load the added image and a page similar to the image below will display it. Follow the on-screen instructions to draw exclusion areas over the image to determine the zones that will be ignored in the detection. Once you are done, click "Save Zone".

- Make sure to restart the Docker container, and make sure the machine has internet access at the time of the restart, to see the effects. When you open your Stream folder, you will now see one file per zone.

Editing and Removing Zone Files

To edit a detection zone, click Edit on Detection Zone, then follow step (3). After this, remove the file zone_camera-id.png from the Stream folder, then follow step (4). When you open your Stream folder, you will now see the new file zone_camera-id.png.

To remove a detection zone, click Remove on Detection Zone. Then remove the file zone_camera-id.png from the Stream folder.

Detection Zone Files

After following the steps above, you will have new files in your Stream folder. For example, if you create a detection zone for camera1, you will have an image at /path/to/stream/zone_camera1.png.

You can also manually create a detection zone mask. Here are the requirements.

- Create a PNG image in your Stream folder. Check the file permissions; it should be readable by your Docker user.

- Name the file zone_camera-id.png (replace camera-id with the ID of your camera).

- Use only two colors: black for masked areas and white for everything else.

- The image resolution should match the resolution used by the camera.

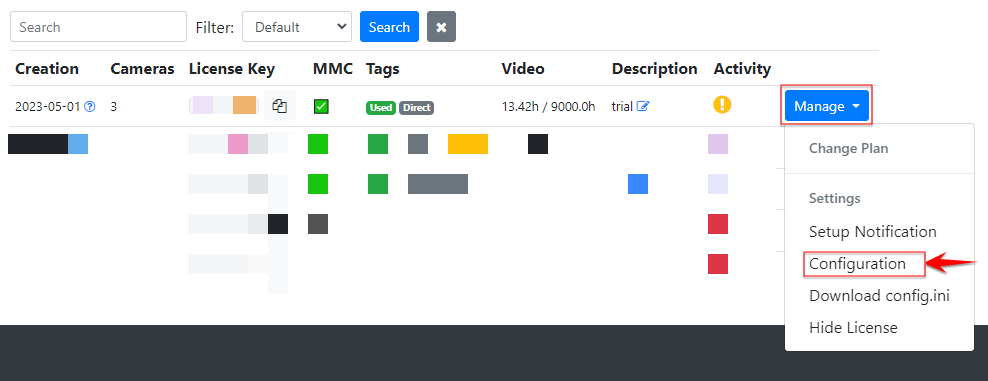
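If you prefer to generate a mask programmatically, the Python sketch below writes a two-color PNG using only the standard library (zlib and struct), with black rectangles on a white background. It assumes Stream accepts an 8-bit RGB PNG that contains only pure black and pure white; make_zone_mask and the rectangle coordinates are hypothetical, and the width and height must match the camera resolution.

```python
import struct
import zlib


def _chunk(kind: bytes, data: bytes) -> bytes:
    # PNG chunk layout: length, type, data, then CRC-32 over type + data.
    return struct.pack(">I", len(data)) + kind + data + struct.pack(">I", zlib.crc32(kind + data) & 0xFFFFFFFF)


def make_zone_mask(width: int, height: int, masked: list[tuple[int, int, int, int]]) -> bytes:
    """Return an RGB PNG: black inside each (xmin, ymin, xmax, ymax) box, white elsewhere."""
    rows = []
    for y in range(height):
        row = bytearray(b"\xff" * (width * 3))  # start with an all-white scanline
        for xmin, ymin, xmax, ymax in masked:
            if ymin <= y < ymax:
                start, end = max(0, xmin), min(width, xmax)
                if start < end:
                    row[start * 3:end * 3] = b"\x00" * ((end - start) * 3)  # black = ignored
        rows.append(b"\x00" + bytes(row))  # filter type 0 (None) before each scanline
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 2, 0, 0, 0)  # 8-bit depth, RGB color type
    return (b"\x89PNG\r\n\x1a\n" + _chunk(b"IHDR", ihdr)
            + _chunk(b"IDAT", zlib.compress(b"".join(rows))) + _chunk(b"IEND", b""))
```

For example, writing make_zone_mask(1920, 1080, [(0, 0, 1920, 100)]) to zone_camera-1.png in the Stream folder would mask the top 100 pixel rows of a 1920x1080 camera, such as a timestamp overlay.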

Configuring Stream Using the Graphical User Interface (GUI)

Stream can automatically create the config.ini file in the Stream working directory, using the parameters you define in the Plate Recognizer app.

Just click the Manage drop-down of the license key you want to configure, and in the Settings category, click on Configuration:

Then follow the configuration form and save changes:

Once you run the container, you should be able to see the autogenerated config.ini file within the Stream working directory.

If you open it with your text editor, you should see a comment on the top of the file:

# ...

# File generated by platerecognizer.com

# If you do local changes, Stream will STOP downloading config.ini from the website.

# Prefer making changes via: https://app.platerecognizer.com/stream-config/<correspondent_license_key>/

#...

config_change_restart = no

The GUI configuration method is useful the first time you run the container or in case the config.ini file is absent from the Stream working directory.

If you didn't define the configuration on the Plate Recognizer app before running the container for the first time, Stream will create a standard demo config.ini file as it does normally.

Steps To Update Stream Configuration from GUI:

- Make the configuration changes you want to apply to the container linked to the corresponding license key and save it.

- Go to the container's PC/Server console.

- Stop the Stream docker container by running the command:

docker stop stream

- Browse to the Stream working directory:

cd path/to/stream/path

- Delete the current config.ini file by running the command:

sudo rm config.ini

- Run the docker container again:

sudo docker restart stream

After that, your changes should be reflected in the new config.ini file.